Critical Thinking: Credence and Veracity

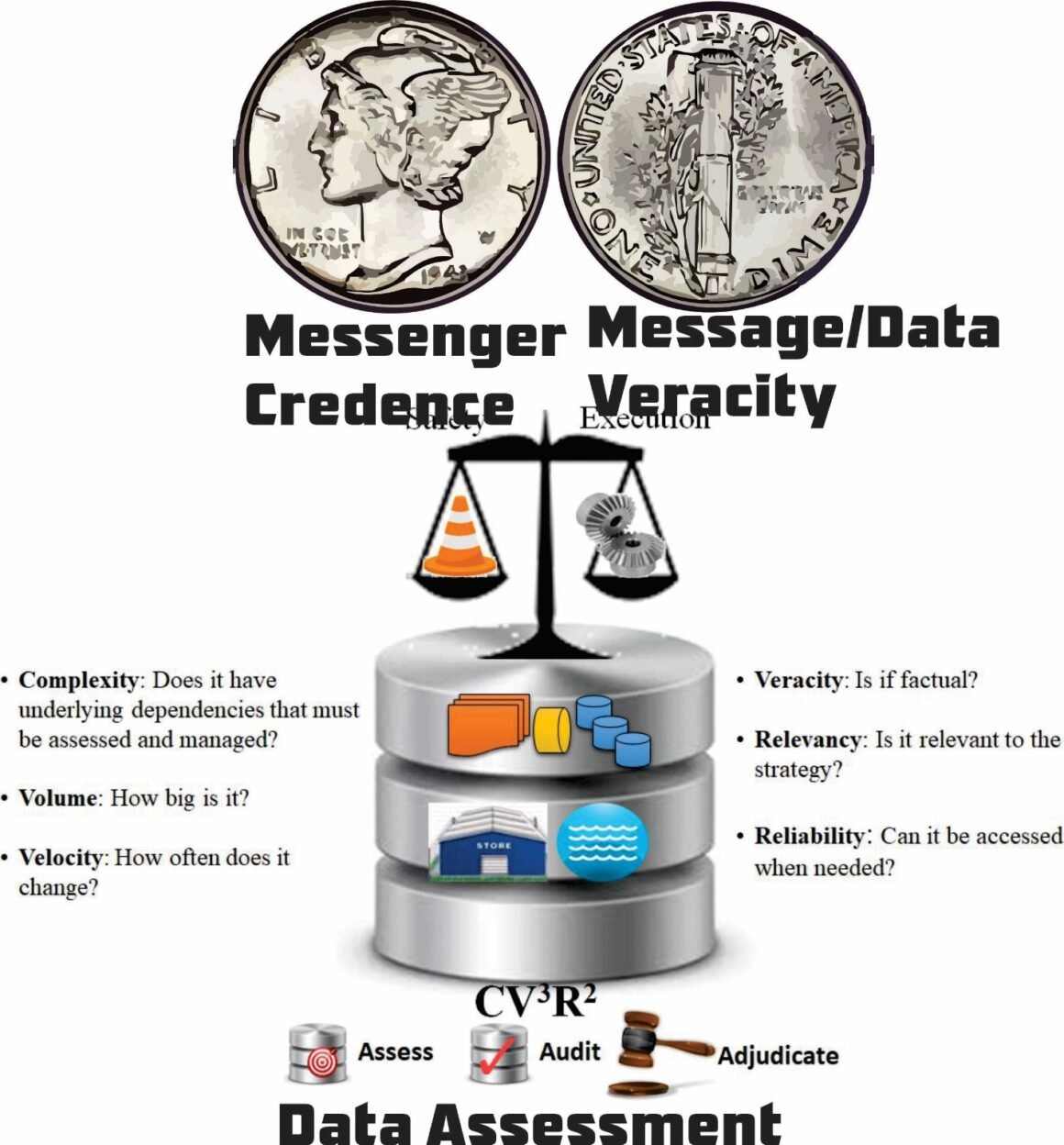

Critical Thinking and Policy Development and Analysis introduced the concepts of credence and veracity and described them as two sides of the same coin: the coin’s value depends on both. The figure above aligns credence with the messenger and veracity with the message. It also adds a data assessment piece, since messages are often based on some form of data and (hopefully) on information and actionable knowledge. I discuss the CV3R2 framework in Thrive in the Age of Knowledge.

As discussed in Critical Thinking and Policy Development and Analysis, credence determines how much we believe the messenger, and veracity determines how much we trust the message. Both require fact-based approaches and consistency. A fact-based approach requires reliable data that analysts and data scientists can readily search, along with processes and tools that turn the data into information and actionable knowledge. Actionable knowledge requires:

- Relevant data

- Understanding of the data, the environment, and the problem/issue

- Appropriate and effective knowledge of how and when to apply information

- Wisdom to effectively apply knowledge at the right time and place

Actionable knowledge differs somewhat from the typical knowledge management (KM) approach. It includes the ability to apply knowledge, which implies more than a simple information library and search criteria. It also implies systems that can associate information with problems and issues and help the analyst understand them and the situation, as well as training in how to apply knowledge. It requires data scientists and subject matter experts working seamlessly with each other and with decision-makers. Actionable knowledge is critical to effective policy development and assessment.

Credence is a function of:

- Messenger experience. Does the messenger have relevant experience and the ability to translate this experience into effective analysis and conclusions?

- Past messaging. Does the messenger have a history of effective analysis and conclusions or have many of the messenger’s conclusions proved flawed?

- Opinion or fact-based. Does the messenger write opinions masked as fact-based analysis?

- The messenger’s organization. Is it partisan or objective? What are its mission and objectives?

Veracity is a function of:

- Sources. Does the message cite its sources? Are the sources reliable and, where appropriate, peer reviewed? The more reliable the sources, the higher the veracity.

- Opinion vs. Fact. Is the message opinion, or is it a fact-based analysis? The higher the opinion quotient, the lower the veracity. The exception is a hypothesis, provided the message either supplies the information and analysis to prove or disprove it or at least frames the process for doing so.

- Reliability. Do the message’s conclusions hold up, or are they consistently wrong or only partially correct? Answering this requires post hoc analysis months or even years after the message. Reliability can also be a function of the messenger or the messenger’s organization: what is their track record?

- Logic. Is the internal flow sound? Can you develop a deductive syllogism from its structure? Are the major and minor premises true, and does the conclusion follow from them?

- Memes. Does the message use trigger words and images to appeal to the emotions and manipulate subconscious processes? The higher the use of memes, the lower the veracity.

The CV3R2 framework assesses data validity based on:

- Complexity. Complexity is a measure of how easy it is to understand the data and to manage and respond to changes in the data set. A simple table is easy to digest. A thousand tables with hundreds of foreign keys within the data structure and links to external data are a very different matter. Complexity is neither good nor bad; a very valuable data set may be extremely complex. Complexity matters because of the resources required for the initial assessment and for ongoing maintenance.

- Volume. Volume is a measure of the size of the data set. On the surface this seems like a small concern, but as data scales up to hundreds of exabytes and social networking sites such as Facebook accumulate ever larger volumes of unstructured data, just handling the data may be difficult.

- Veracity. Veracity measures data integrity and the degree of confidence for internal processes and decision-making. Integrity is a function of accuracy and consistency. Accuracy issues include incorrect facts, speculation passed off as fact, and old data that was once accurate but no longer is. Consistency issues include the same data source appearing multiple times with different values and multiple versions of authoritative data.

- Relevancy. Relevancy measures the degree of correlation to the organization’s strategic and operational plans and execution. Even high-confidence data may not be useful if it is unrelated to the organization’s plans and operations.

- Reliability. Reliability measures how easily and dependably data can be accessed. Data with high confidence and high relevance may still be of limited use if it is not accessible when needed.
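The two integrity problems under data Veracity, inconsistent values and data that has gone stale, are the easiest to check mechanically. The following is a minimal Python sketch, not a production tool, assuming each record arrives as a hypothetical `(source, key, value, as_of_date)` tuple. The function names, the record format, and the sample values are all illustrative assumptions rather than real statistics.

```python
from collections import defaultdict
from datetime import date

# Hypothetical record format: (source, key, value, as_of_date).
# Values must be hashable (numbers, strings) so they can go in a set.


def find_conflicts(records):
    """Consistency check: return keys reported with more than one distinct value."""
    values_by_key = defaultdict(set)
    for _source, key, value, _as_of in records:
        values_by_key[key].add(value)
    return {key: values for key, values in values_by_key.items() if len(values) > 1}


def find_stale(records, today, max_age_days):
    """Accuracy check: return records whose as-of date is older than max_age_days.

    Stale data is not necessarily wrong; it is flagged for an analyst to verify.
    """
    return [r for r in records if (today - r[3]).days > max_age_days]


# Example with made-up records (illustrative only, not real statistics).
records = [
    ("agency_a", "county_population", 1000, date(2020, 4, 1)),
    ("agency_b", "county_population", 1050, date(2023, 7, 1)),
    ("agency_a", "median_income", 52000, date(2023, 1, 1)),
]
print(find_conflicts(records))
print(find_stale(records, today=date(2024, 1, 1), max_age_days=730))
```

Neither check says which value is correct; it only narrows where an analyst should look, which keeps the human judgment the framework depends on.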

There are problems with credence and veracity on both sides of the political divide. For example:

- I have lost count of right-of-center pundits proclaiming that some message or event “slams” Democrats or that “the hammer is coming.” The hammer never drops.

- I get at least five emails a day that take some event, sensationalize it, and then ask for money. Few if any results ever follow.

- Partisans rely on memes and trigger phrases to manipulate and inflame their bases and raise money.

With both sides engaging in these practices to manipulate the electorate, we cannot rely on any partisan group, governmental organization, or political party to assess credence and veracity. Either voters need to do it themselves, or we need a reliable center that provides non-partisan assessments. Given the educational issues we have today and the limited instruction in critical thinking, we need to do two things:

- Establish a center with the tools and capacity to assess the media. Analysts can use the dimensions in the three areas above to score the messenger’s credence, the message’s veracity, and the data validity. This can be as simple as a 1-5 or 1-10 score on each dimension or as elaborate as a machine learning (ML) approach. Either way, the messenger’s credence must be continually reassessed: formerly high-credence messengers can lose credence if they become more partisan or use tainted messages. A funded center can use ML to crawl the web looking for messages and messengers and assess them automatically. A few sites now at least examine the political bias of organizations and try to report it objectively. An ML approach can be more effective and potentially limit bias in assessment. Let us not kid ourselves, though: ML relies on rules, and rules can have built-in bias. The center needs to make its rule sets public and transparent.

- Strengthen our education system and follow the P21 and other recommendations to improve critical thinking and other key aspects of education.
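The simple 1-5 scoring option for such a center can be sketched in a few lines. This is a minimal Python sketch under stated assumptions, not a specification: the dimension names paraphrase the credence, veracity, and data validity lists above; every dimension is weighted equally; and every score is oriented so that higher is better (for example, `meme_free` means few memes, and `complexity` and `volume` mean how manageable the data set is, since complexity itself is neither good nor bad). Continual reassessment is modeled here with an exponential moving average, a technique I am swapping in for illustration; the names `area_score` and `update_credence` and the 0.3 weight are hypothetical.

```python
from statistics import mean

# Dimension names paraphrase the three lists above (assumption).
# All scores are oriented so higher is better (assumption).
CREDENCE = ("experience", "past_messaging", "fact_based", "organization")
VERACITY = ("sources", "fact_not_opinion", "reliability", "logic", "meme_free")
DATA_VALIDITY = ("complexity", "volume", "veracity", "relevancy", "reliability")


def area_score(scores, dimensions, low=1, high=5):
    """Equal-weight average of one area's dimension scores on a low-high scale."""
    for dim in dimensions:
        if dim not in scores:
            raise ValueError(f"dimension not scored: {dim}")
        if not low <= scores[dim] <= high:
            raise ValueError(f"{dim}={scores[dim]} is outside {low}-{high}")
    return mean(scores[dim] for dim in dimensions)


def update_credence(previous, latest, weight=0.3):
    """Exponential moving average of a messenger's credence over time.

    Recent assessments count more, so a formerly high-credence messenger
    loses credence if new messages score poorly.
    """
    return (1 - weight) * previous + weight * latest


# Example: one analyst's hypothetical scores for a messenger.
messenger = {"experience": 4, "past_messaging": 3, "fact_based": 5, "organization": 4}
credence_now = area_score(messenger, CREDENCE)  # mean of 4, 3, 5, 4
print(update_credence(4.5, credence_now))
```

Equal weighting is the most transparent starting point, which matters given the point above about publishing rule sets; any weighting or ML model a center adopts would need the same public scrutiny.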