Critical Thinking: Introduction to Key Components and Dimensions

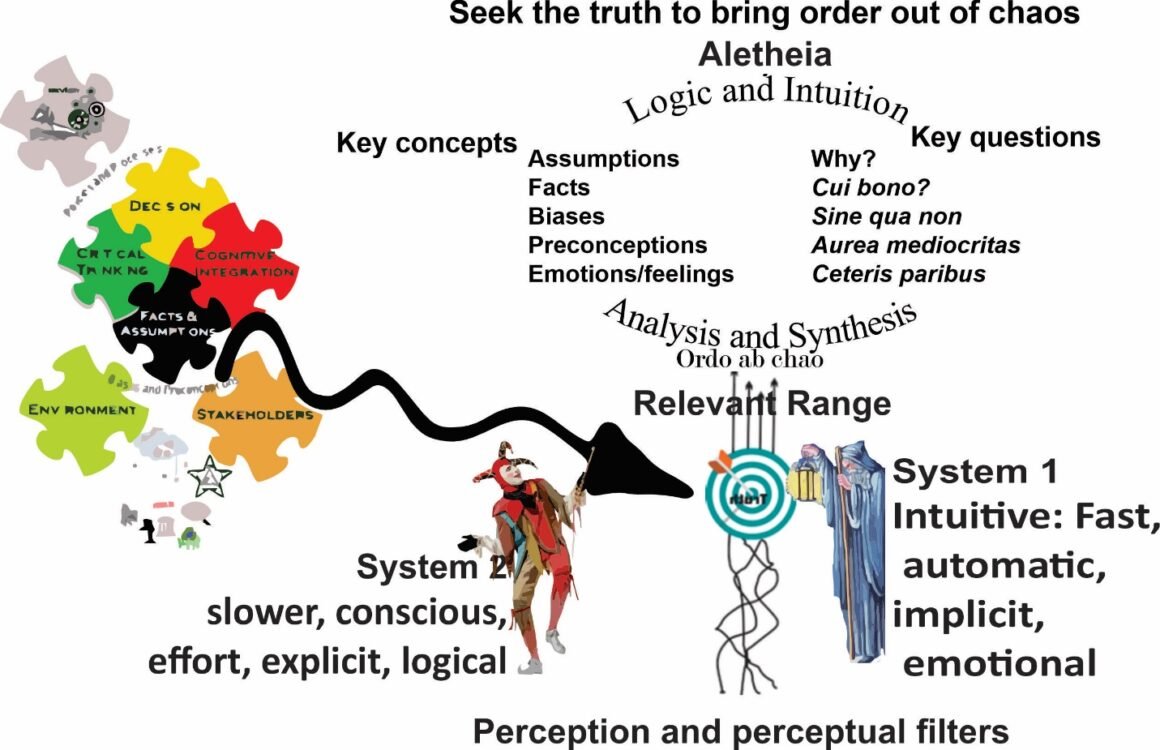

This series will address the components and dimensions shown in the figure above. This first installment summarizes the critical thinking framework; subsequent installments will examine the components and dimensions in greater detail.

This is an important and complex topic. Our governments and corporations operate in an increasingly complex environment with many dynamic variables. We are not well served when our educational institutions either do not teach critical thinking at all or reduce it to biases and preconceptions. Biases and preconceptions are important, but they are only one dimension of critical thinking (perception and perceptual filters). Both sides of the political divide, if they seek effective governance and policies, need to understand and use critical thinking. Not to put too fine a point on it, but those who seek to dilute or otherwise sidetrack critical thinking are perhaps more interested in political agendas than in effective governance.

There are three key dimensions to critical thinking:

- Perception and perceptual filters

- Conscious deliberate thought: System 2

- Subconscious intuitive thought: System 1

Perception and perceptual filters are the realm of biases, preconceptions, and data collection tools and processes. We need to understand how we gather data and which data we ignore, either because it does not fit our expectations or because our tools and processes are blind to it.

System 1 thinking (represented by the Hermit) and System 2 thinking (represented by the Jester) are the cognitive processes that ask the questions in the lead figure and engage processes and tools. System 1 operates at the intuitive, subconscious level and relies on heuristics. System 2 operates at the conscious, deliberate level and employs deliberate processes and tools. Daniel Kahneman popularized this model, and he and others estimate that perhaps 90% of decisions are made by System 1. Later installments will address these two systems in greater detail.

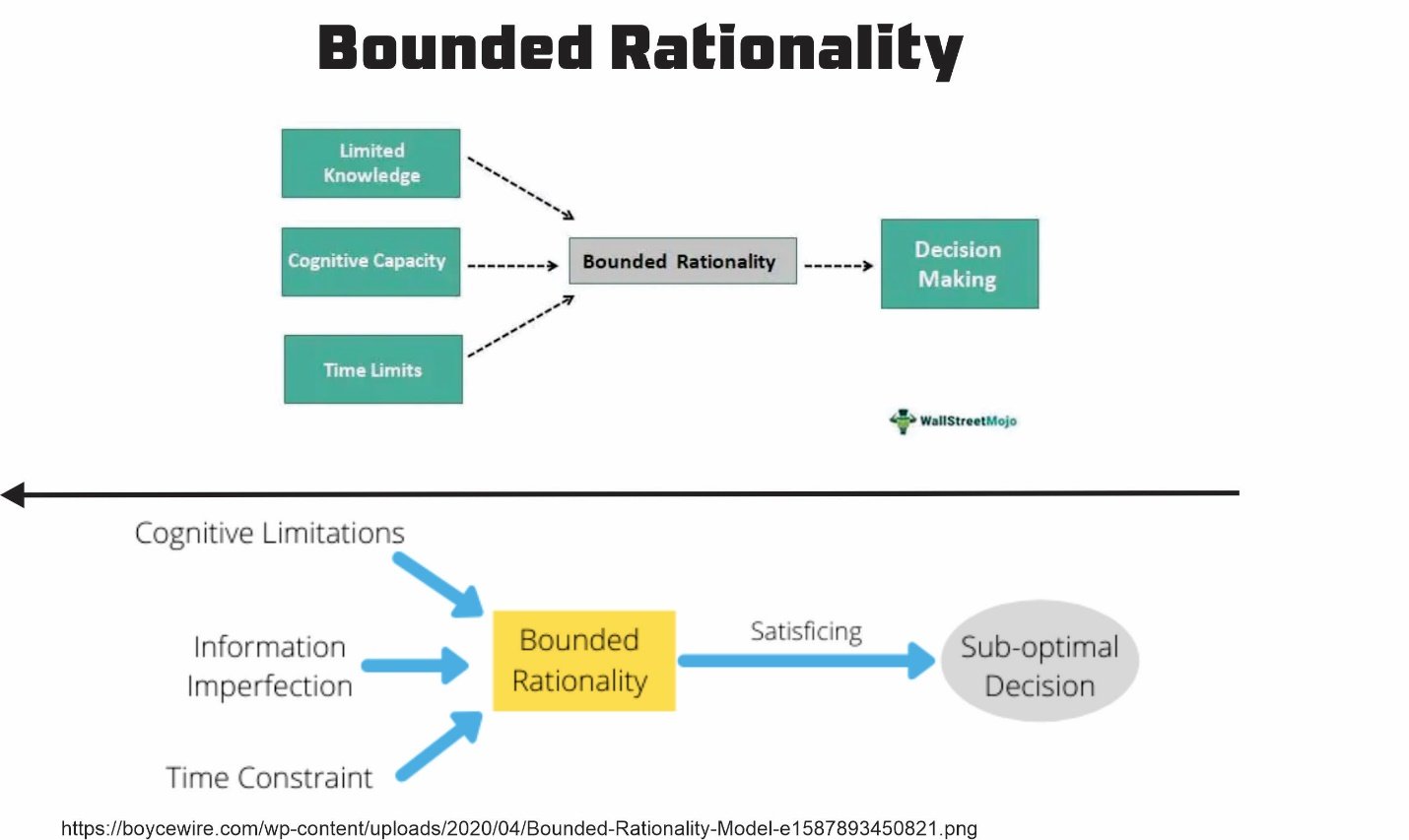

Together, these three dimensions make up the left-hand sections of the Bounded Rationality model in the accompanying figure.

System 1 and System 2 thinking comprise our cognitive aspects, while our skills and abilities determine the relevant cognitive limitations. Biases, together with our data collection and analysis, determine the degree of information imperfection. Even if we collect the relevant data, our processes may not recognize its value and turn it into the information we need.

That leaves time constraints. The tighter the time constraint, the greater the reliance on System 1 thinking, and the greater the reliance on System 1, the greater the reliance on intuition honed by experience. But experience is based on history. What happens when the situation differs from historical experience, and the heuristics that planners and decision-makers rely on become less relevant, misleading, or even completely wrong? How do we assess the bounds of our rationality?

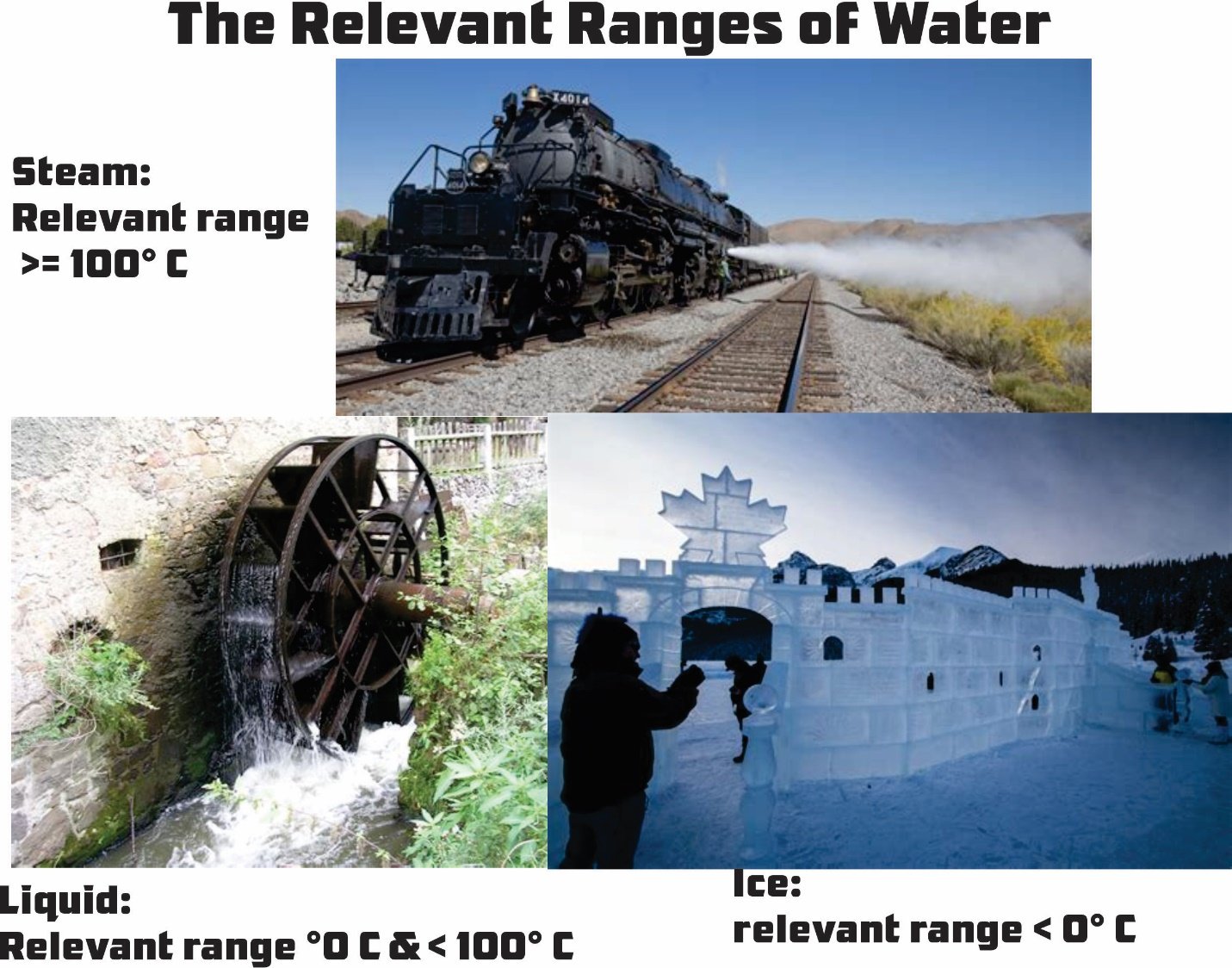

We must assess these dimensions within their relevant ranges to determine the effects of assumptions, facts, and even critical questions. To illustrate this point, consider the water example in the accompanying figure.

In most situations water is a liquid, but that holds only within the relevant range of 0°C to 100°C (at standard atmospheric pressure). Below that range water is a solid, and above it water is a gas. The closer we get to the boundaries of the relevant range, the less certain we are of our facts and assumptions. To an extent, every fact carries the assumption that we are operating within that fact's relevant range.
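The water example can be expressed as a small check that treats a "fact" as valid only inside its relevant range and flags values near a boundary. This is a minimal sketch: the function name `water_state` and the 5-degree `margin` are hypothetical choices for illustration, and the 0 to 100 degree range assumes standard atmospheric pressure.

```python
# Minimal sketch: a "fact" (water is liquid) holds only inside its relevant range.
# Assumptions: standard atmospheric pressure; the 5-degree margin is arbitrary.

def water_state(temp_c, low=0.0, high=100.0, margin=5.0):
    """Return (state, note) for water at temp_c degrees Celsius."""
    if temp_c < low:
        return "solid", "outside relevant range"
    if temp_c > high:
        return "gas", "outside relevant range"
    # Inside the range, but close enough to a boundary that assumptions deserve scrutiny.
    if temp_c - low < margin or high - temp_c < margin:
        return "liquid", "near boundary: verify assumptions"
    return "liquid", "well inside relevant range"

for t in (-10, 2, 50, 98, 120):
    print(t, water_state(t))
```

The margin stands in for the growing uncertainty near a boundary: the fact "water is a liquid" is still returned at 2°C or 98°C, but flagged as one whose underlying assumptions (such as the pressure) deserve a second look.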

Understanding relevant ranges requires a deep understanding of the situation and of the dynamics of facts, assumptions, and questions. Some relevant ranges, like the water example, are easy to see and understand. Others are far more complex and difficult to grasp. Scenario planning and sensitivity analysis are often excellent tools for understanding relevant ranges and what happens as the situation nears or crosses their borders. But scenario analysis depends on effective models. Planners need to constantly evaluate and test their models. Those who treat models as black boxes risk failure in complex situations that fall outside the relevant range.

The first step in critical thinking is to understand the boundary conditions of our rational decision-making, as well as those of our intuition and heuristics. Thus, the first question should be: how is this situation similar to and different from our historical experience? The second question is: do we need to expand these boundaries? The third is: how do we extend them?

The key concepts and questions in the opening figure help us frame and address these three questions, and then dig deeper into the data to turn it into information and, ultimately, actionable knowledge.