Critical Thinking: Decisions and System 1 and System 2 Thinking

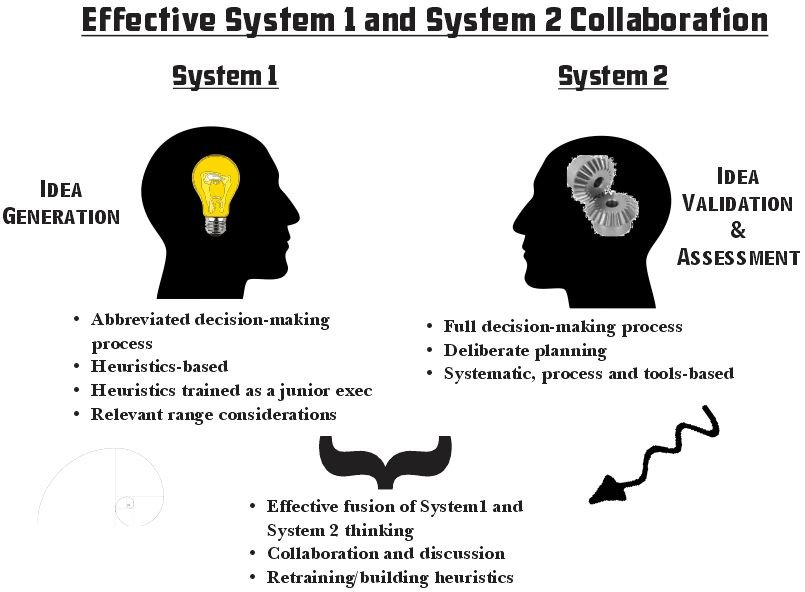

Figure 1 Integrated System 1 and System 2 Approach

Daniel Kahneman popularized System 1 and System 2 as two different ways to think through issues and solve problems. System 1 is quick and intuitive. System 2 is slower, deliberate, and driven by process knowledge. Good teams fuse the two approaches in a way that leverages the strengths of both, as shown in Figure 1. Stanovich and West (2000, p. 658), who introduced the terms, define System 1 and System 2 as follows:

“System 1 is characterized as automatic, largely unconscious, and relatively undemanding of computational capacity. Thus, it conjoins properties of automaticity and heuristic processing as these constructs have been variously discussed in the literature.”

“System 2 conjoins the various characteristics that have been viewed as typifying controlled processing. System 2 encompasses the processes of analytic intelligence that have traditionally been studied by information processing theorists trying to uncover the computational components underlying intelligence.”

In their discussion of how knowledge management facilitates decision-making, McKenzie et al. (2011, p. 406) describe three types of decisions:

1. “Simple decisions are not necessarily easy decisions, but cause-and-effect linkages are readily identifiable and action produces a foreseeable outcome.

2. Complicated decisions arise less frequently. Cause and effect linkages are still identifiable, but it is harder. It takes expertise to make sense of the situation and evaluate options.

3. Complex decisions have no right answers. Although infrequent, they have big consequences. Cause and effect are indeterminable because of the interdependent factors and influences. The outcome of actions is unpredictable; patterns can only be identified in retrospect.”

These three categories provide a useful way to bin decisions and may help govern how decision systems manage and process them.

System 1 decisions are based on heuristics, which are tied to intuition. That is why the figure in the piece on critical thinking and time uses the Hermit icon. The Hermit seeks to cast light into the darkness and understand our intuition and the value of the heuristics it implicitly uses. Effective organizations recognize that they make many System 1 decisions, and they try to understand the heuristics involved and whether the current situation falls within those heuristics' relevant range.

System 2 decisions are based on decision science and processes. However, they almost always include assumptions to span information gaps. The Jester icon reminds us to test and validate these assumptions. In a medieval court, the Jester was often the only one who could question a decision, because he did so through humor: the ruler could readily deflect the joke but still get the message. Organizational culture is critical to how well an organization's Jester can function. If no one questions decisions, the organization may act on flawed assumptions that lead to poor decisions.

Table 1 Decision Types and Approaches

| Decision Type | System Type | Approach |
|---|---|---|
| Simple | System 1 | Validate relevant range. |
| Complicated | System 1 and System 2 | Establish relevant ranges. Establish a hypothesis with System 1. Analyze and test the hypothesis with System 2. |
| Complex | System 1 and System 2 | Set solution boundaries. Develop scenarios with System 1. Test and assess scenarios with System 2. Pick the optimum scenario. May require multiple iterations. |
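As an illustration only, the logic of Table 1 can be sketched in Python, assuming a decision can be screened with two yes/no questions drawn from McKenzie et al.'s descriptions: are cause-and-effect linkages identifiable at all, and does making sense of them require expertise? The names `DecisionType`, `APPROACHES`, `classify`, and `approach_for` are hypothetical, and a two-question screen is a simplification, not a validated test.

```python
from enum import Enum


class DecisionType(Enum):
    SIMPLE = "Simple"
    COMPLICATED = "Complicated"
    COMPLEX = "Complex"


# Table 1 encoded as data: decision type -> (systems engaged, approach steps).
APPROACHES = {
    DecisionType.SIMPLE: (("System 1",), ["Validate relevant range."]),
    DecisionType.COMPLICATED: (
        ("System 1", "System 2"),
        [
            "Establish relevant ranges.",
            "Establish a hypothesis with System 1.",
            "Analyze and test the hypothesis with System 2.",
        ],
    ),
    DecisionType.COMPLEX: (
        ("System 1", "System 2"),
        [
            "Set solution boundaries.",
            "Develop scenarios with System 1.",
            "Test and assess scenarios with System 2.",
            "Pick the optimum scenario (may require multiple iterations).",
        ],
    ),
}


def classify(cause_effect_identifiable: bool, needs_expertise: bool) -> DecisionType:
    """Hypothetical two-question screen based on McKenzie et al.'s descriptions."""
    if not cause_effect_identifiable:
        return DecisionType.COMPLEX  # outcomes unpredictable; patterns only in retrospect
    if needs_expertise:
        return DecisionType.COMPLICATED  # linkages identifiable, but harder to see
    return DecisionType.SIMPLE  # linkages readily identifiable


def approach_for(cause_effect_identifiable: bool, needs_expertise: bool):
    """Return the decision type plus the systems and steps Table 1 assigns to it."""
    decision_type = classify(cause_effect_identifiable, needs_expertise)
    systems, steps = APPROACHES[decision_type]
    return decision_type, systems, steps


if __name__ == "__main__":
    kind, systems, steps = approach_for(cause_effect_identifiable=True, needs_expertise=True)
    print(kind.value, "->", " + ".join(systems))
    for step in steps:
        print(" -", step)
```

Keeping the table as data rather than branching logic means the approaches can be revised without touching the classification step.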

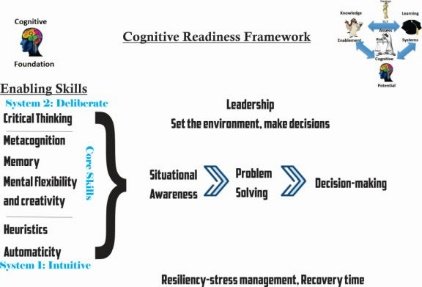

The Cognitive Framework shown in Figure 2 shows how System 1 and System 2 fit into an integrated decision support approach to address complicated and complex problems..

The foundational components on the left are based on a paper by Morrison and Fletcher on Cognitive Readiness (Morrison & Fletcher, 2002). This framework updates them somewhat for current research and shows how they fit into a System 1 and System 2 model and integrates them into a holistic approach to decision-making, as shown in Figure 2.

Figure 2 Cognitive Readiness Framework

The goal is to use critical thinking to test for biases and to check the relevant ranges of heuristics. Ideally, we first engage System 1 thinking to generate candidate solutions, then, time permitting, engage System 2 thinking to refine and test them and select the best. Figure 2 also reflects the effects of Design Thinking and Scientific/Operational/Technical decision-making. While Design Thinking has a process, many treat it as a softer one, so it may blend in elements from the System 1 side of decision-making. Depending on organizational culture, the Design Thinking spiral icon could sit between the System 1 and System 2 icons or on either side of them. We cannot discount the impact of organizational culture.
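The sequence described above, with System 1 proposing and System 2 testing as time allows, resembles a generate-and-test loop with a time budget. The following hypothetical Python sketch assumes System 1 supplies candidate options already ranked by intuitive preference and that a System 2 assessment can be expressed as a numeric `score` function; `decide` and its parameters are illustrative names, not part of any framework cited here.

```python
import time
from typing import Callable, Iterable, List, Optional, Tuple


def decide(
    candidates: Iterable[str],
    score: Callable[[str], float],
    time_budget_s: float,
) -> Tuple[Optional[str], List[str]]:
    """Hypothetical sketch: System 1 proposes ranked candidates; System 2 scores
    them one at a time until the time budget runs out."""
    ordered = list(candidates)  # System 1 output: intuitive ranking, best guess first
    if not ordered:
        return None, []
    best = ordered[0]  # fallback if there is no time to deliberate
    best_score = float("-inf")
    evaluated: List[str] = []
    deadline = time.monotonic() + time_budget_s
    for option in ordered:
        if time.monotonic() >= deadline:
            break  # out of time: keep the best option examined so far
        s = score(option)  # System 2: deliberate assessment
        evaluated.append(option)
        if s > best_score:
            best, best_score = option, s
    return best, evaluated


options = ["expand", "hold", "divest"]  # hypothetical System 1 ranking
scores = {"expand": 0.4, "hold": 0.7, "divest": 0.1}  # hypothetical System 2 assessments
print(decide(options, scores.get, time_budget_s=1.0))  # ('hold', ['expand', 'hold', 'divest'])
print(decide(options, scores.get, time_budget_s=0.0))  # ('expand', [])
```

When the budget is exhausted before any assessment, the sketch falls back on the intuitive first choice, mirroring the idea that the System 1 answer is what remains when time runs out.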

Literature Review

Akinci and Sadler-Smith (2019) write about the intuitive aspects of decision-making, which can bypass structured decision-making processes. They focus on the collaborative aspects of knowledge-building that can create collective intuition and learning. They write, “Organizational learning occurs as a result of cognitions initiated by individuals’ intuitions transcending to the group level by way of articulations and interactions between the group members through the processes of interpreting (cf. ‘externalization’) and integrating, and which become institutionalized at the organization level” (Akinci & Sadler-Smith, 2019, p. 560). Akinci and Sadler-Smith then bring in Weick’s work and the concept of System 1 and System 2 thinking: intuitive decision-making is a System 1 approach, and structured decision-making is a System 2 approach.

Lawson et al. (2020) and Arnott and Gao (2019) also draw on System 1 and System 2, described as thinking fast and thinking slow. Lawson et al. focus more on individual decisions, which may or may not require a decision-making system. They find that having the “right” rules and cognitive faculties is key to effective decision-making and to mitigating decision bias. Arnott and Gao discuss these ideas in terms of behavioral economics (BE) and discuss ways to incorporate BE into structured decision-making and Decision Support Systems (DSS). They draw on the work of Nobel laureate Daniel Kahneman in this field, including his work on bounded rationality and how it affects decision-making. In this body of work, Kahneman also discusses System 1 and System 2 thinking and the concept of cognitive biases. An effective decision-making system must recognize cognitive biases.

McKenzie et al. (2011) discuss how knowledge management (KM) may help mitigate bias and facilitate other aspects of decision-making. Like Lawson et al. and Arnott and Gao, they cite Kahneman extensively. They discuss the impact of emotions and biases on rational choice and how KM can mitigate them. Table II in their paper lists KM approaches to common cognitive biases (McKenzie et al., 2011, p. 408). Ghasemaghaei (2019) concurs that KM is an integral part of decision-making and discusses how KM can help manage the variety, volume, and velocity of data. The findings also show that data analytic tools enhance knowledge sharing, and that knowledge sharing improves decision-making quality.

Cognitive biases affect both deliberate and intuitive decision-making, at both the individual and the group level. Both Lawson et al. and Arnott and Gao note that rules may facilitate decision-making and mitigate bias. Rules may be heuristics or automatically applied rules. Heuristics are closely associated with System 1 decisions, but groups can use them in System 2 as well. Rules can also be built into a decision model, and the model’s rules may be static or adaptive. In a static model, planners and data scientists must adjust the rules manually. In an adaptive model, Machine Learning (ML) or Artificial Intelligence (AI) may change the rules as it learns the system. However, as Ntoutsi et al. (2020) and Righetti et al. (2019) note, even AI systems may have their own biases.
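To make the static/adaptive distinction concrete, here is a toy Python sketch of a single threshold rule that flags a case for deliberate (System 2) review. The static version changes only when a planner edits its threshold; the adaptive version nudges its own threshold from outcome feedback using a simple error-driven update. This update rule is an assumption chosen for brevity, far simpler than the ML and AI methods the cited papers discuss, and the class names and feedback data are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class StaticRule:
    """Threshold fixed by planners; it changes only when someone edits it."""
    threshold: float

    def flag(self, risk: float) -> bool:
        # Flag the case for System 2 review when the risk score meets the threshold.
        return risk >= self.threshold


@dataclass
class AdaptiveRule(StaticRule):
    """Toy error-driven update: the rule adjusts its own threshold from feedback."""
    step: float = 0.05

    def learn(self, risk: float, was_problem: bool) -> None:
        flagged = self.flag(risk)
        if flagged and not was_problem:
            self.threshold += self.step  # false alarm: become less sensitive
        elif not flagged and was_problem:
            self.threshold -= self.step  # missed problem: become more sensitive


static = StaticRule(threshold=0.5)
adaptive = AdaptiveRule(threshold=0.5)
# Hypothetical feedback: (risk score, whether the case actually became a problem)
history = [(0.45, True), (0.40, True), (0.60, False)]
for risk, was_problem in history:
    adaptive.learn(risk, was_problem)
print(static.threshold, round(adaptive.threshold, 2))  # prints: 0.5 0.45
```

The adaptive rule learns only from the feedback it receives; if that feedback is skewed, the learned threshold will be skewed as well, which echoes the point Ntoutsi et al. and Righetti et al. make about bias in AI systems.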

Shrestha et al. (2019) examine AI’s impact on organizational decision-making and discuss key factors, including large data sets and cost. Samek and Müller note that AI and ML systems can also become black boxes if users do not understand how they work and the assumptions built into them. While AI and ML have potential, their costs and complexity may exceed the capabilities and capacities of smaller organizations.

Dogma, beliefs, and mental models are essentially System 1 thinking masquerading as System 2 thinking. In a paper on policy legitimation, Jensen (2003, pp. 524–525) notes, “Cognitive legitimacy can occur when a policy is ‘taken for granted,’ when it is viewed as necessary or inevitable (Suchman 1995). In some cases, cultural models that provide justification for the policy and its objectives may be in place. In other cases, ‘taken-for-granted’ legitimacy rises to a level where dissent is not possible because an organization, innovation, or policy is part of the social structure (Scott 1995).” The cognitive framework must help decision-makers recognize when System 1 thinking drives System 2-type decisions, and when the biases and heuristics inherent in System 1 thought shape, or even cut off, discourse and dialogue. This is also vital to an effective Community of Practice.

Pöyhönen (2017) and Baggio et al. (2019) both show that cognitive diversity is valuable in complex and difficult problem sets, but less so in more routine cases. However, neither explicitly states whether their study focuses on System 1 or System 2 decisions. Stanovich and West (2000, p. 658) define System 1 as subconscious decisions dominated by heuristics, and System 2 as deliberate decisions based on an assessment of alternatives. Their research shows that 80% of the decisions people make are System 1 decisions. Therefore, these two papers potentially miss a critical area of decision-making: the everyday decisions that execute an operational plan. This gap matters most for subconscious biases, which can sway both System 1 and System 2 thinking. Without cognitive diversity, these biases may go unnoticed and never be challenged.

As we continue to refine our approaches to conflict, more research into trust, adaptive leadership, and adaptive cultures may help validate Olson et al.’s approach to competence-based trust. This research could be combined with work on the relationship between cognitive diversity and complex problems, and with validating the value of cognitive diversity in mitigating the effects of bias on System 1 thinking. Does trust help identify hidden biases? If so, cognitive diversity is relevant to both System 1 and System 2 thinking, regardless of the level of complexity and difficulty.

Selected Bibliography

Akinci, C., & Sadler-Smith, E. (2019). Collective Intuition: Implications for Improved Decision Making and Organizational Learning. British Journal of Management, 30(3), 558–577. https://doi.org/10.1111/1467-8551.12269.

Arnott, D., & Gao, S. (2019). Behavioral economics for decision support systems researchers. Decision Support Systems, 122, 113063. https://doi.org/10.1016/j.dss.2019.05.003.

Aven, T. (2018). How the integration of System 1-System 2 thinking and recent risk perspectives can improve risk assessment and management. Reliability Engineering and System Safety, 180, 237–244. https://doi.org/10.1016/j.ress.2018.07.031.

Ghasemaghaei, M. (2019). Does data analytics use improve firm decision making quality? The role of knowledge sharing and data analytics competency. Decision Support Systems, 120, 14–24. https://doi.org/10.1016/j.dss.2019.03.004.

Jensen, J. L. (2003). Policy Diffusion through Institutional Legitimation: State Lotteries. Journal of Public Administration Research and Theory, 13(4), 521–541. https://doi.org/10.1093/jopart/mug033.

Kahneman, D. (2011). Thinking, Fast and Slow. Farrar, Straus and Giroux.

Lawson, M. A., Larrick, R. P., & Soll, J. B. (2020). Comparing fast thinking and slow thinking: The relative benefits of interventions, individual differences, and inferential rules. Judgment and Decision Making, 15(5), 660–684.

McKenzie, J., van Winkelen, C., & Grewal, S. (2011). Developing organisational decision-making capability: A knowledge manager’s guide. Journal of Knowledge Management.

Morrison, J. E., & Fletcher, J. D. (2002, October). Cognitive Readiness. Retrieved from http://www.dtic.mil/cgi-bin/GetTRDoc?AD=ADA417618.

Ntoutsi, E., Fafalios, P., Gadiraju, U., Iosifidis, V., Nejdl, W., Vidal, M. E., Ruggieri, S., Turini, F., Papadopoulos, S., Krasanakis, E., Kompatsiaris, I., Kinder-Kurlanda, K., Wagner, C., Karimi, F., Fernandez, M., Alani, H., Berendt, B., Kruegel, T., Heinze, C., … Staab, S. (2020). Bias in data-driven artificial intelligence systems—An introductory survey. Wiley Interdisciplinary Reviews: Data Mining and Knowledge Discovery, 10(3), 1–14. https://doi.org/10.1002/widm.1356.

Pöyhönen, S. (2017). Value of cognitive diversity in science. Synthese, 194(11), 4519–4540. https://doi.org/10.1007/s11229-016-1147-4.

Righetti, L., Madhavan, R., & Chatila, R. (2019). Unintended Consequences of Biased Robotic and Artificial Intelligence Systems [Ethical, Legal, and Societal Issues]. IEEE Robotics and Automation Magazine, 26(3), 11–13. https://doi.org/10.1109/MRA.2019.2926996.

Scott, W. R. (2014). W. Richard Scott (1995), Institutions and Organizations. Ideas, Interests and Identities. Management, 17(2). https://doi.org/10.3917/mana.172.0136.

Shrestha, Y. R., Ben-Menahem, S. M., & von Krogh, G. (2019). Organizational Decision-Making Structures in the Age of Artificial Intelligence. California Management Review, 66–83. https://doi.org/10.1177/0008125619862257.

Stanovich, K. E., & West, R. F. (2003). Individual differences in reasoning: Implications for the rationality debate? Behavioral and Brain Sciences, 26(4), 527. https://doi.org/10.1017/S0140525X03210116.